Human beings have been blaming strange behaviour on the full moon for centuries. In the Middle Ages, for example, people claimed that a full moon could turn humans into werewolves. In the 1700s, it was common to believe that a full moon could cause epilepsy or feverish temperatures. We even changed our language to match our beliefs. The word lunatic comes from the Latin root word ‘luna’, which means moon.

Today, we have (mostly) come to our senses. While we no longer blame sickness and disease on the phases of the moon, we still hear people use it as a casual explanation for outlandish behaviour. For example, a common story in medical circles is that during a chaotic evening at the hospital one of the nurses will often say, “Must be a full moon tonight.”

There is little evidence that a full moon actually impacts our behaviours. A complete analysis of more than 30 peer-reviewed studies found no correlation between a full moon and hospital admissions, lottery ticket pay-outs, suicides, traffic accidents, crime rates, and many other common events. But here’s the interesting thing: even though the research says otherwise, a 2005 study revealed that 7 out of 10 nurses still believed that “a full moon led to more chaos and patients that night.”

How is that possible? The nurses who swear that a full moon causes strange behaviour aren’t stupid. They are simply falling victim to a common mental error that plagues all of us. Psychologists refer to this little brain mistake as an “illusory correlation.”

How We Fool Ourselves Without Realizing It

An illusory correlation happens when we mistakenly over-emphasize one outcome and ignore the others. For example, let’s say we visit Mumbai City and someone cuts us off as we’re boarding the subway train. Then, we go to a restaurant and the waiter is rude to us. Finally, we ask someone on the street for directions and they blow us off. When we think back on our trip to Mumbai, it is easy to remember these experiences and conclude that “people from Mumbai are rude” or “people in big cities are rude.”

However, we are forgetting about all of the meals we ate when the waiter acted perfectly normally, and the hundreds of people we passed on the subway platform who didn’t cut us off. These were literally non-events because nothing notable happened. As a result, it is easier to remember the times someone acted rudely toward us than the times when we dined happily or took the subway in peace.

Here’s where the brain science comes into play: hundreds of psychology studies have shown that we tend to overestimate the importance of events we can easily recall and underestimate the importance of events we have trouble recalling. The easier something is to remember, the more likely we are to create a strong relationship between two things that are weakly related or not related at all.

The Genesis

Our ability to think about causes and associations is fundamentally important, and always has been for our evolutionary ancestors – we needed to know if a particular berry makes us sick, or if a particular cloud pattern predicts bad weather. So it is not surprising that we automatically make judgements of this kind. We don’t have to mentally count events, tally correlations and systematically discount alternative explanations. We have strong intuitions about what things go together, intuitions that just spring to mind, often after very little experience. This is good for making decisions in a world where you often don’t have enough time to think before you act, but with the side-effect that these intuitions contain some predictable errors. One such error is illusory correlation. Two things that are individually salient seem to be associated when they are not.

One explanation is that things that are relatively uncommon are more vivid (because of their rarity). This, and an effect of existing stereotypes, creates a mistaken impression that the two things are associated when they are not. This is a side effect of an intuitive mental machinery for reasoning about the world. Most of the time it is quick and delivers reliable answers – but it seems to be susceptible to error when dealing with rare but vivid events, particularly where preconceived biases operate. Associating bad traffic behaviour with ethnic minority drivers, or cyclists, is another case where people report correlations that just are not there. Both the minority (either an ethnic minority, or the cyclists) and bad behaviour stand out. Our quick-but-dirty inferential machinery leaps to the conclusion that the events are commonly associated, when they are not.

Self Perspective

Sometimes we feel like the whole world is against us. The other lanes of traffic always move faster than ours. Traffic signals are always red when we are in a hurry. The same goes for supermarket queues. Why does it always rain on the occasions when we do not carry an umbrella, and why do flies always want to eat our sandwiches at a picnic and not other people’s? It feels like there is only one reasonable explanation: the universe itself has a vendetta against us. Call it the universe-victim theory.

So here we have a mechanism which might explain our woes. The other lanes or queues moving faster is one salient event, and our intuition wrongly associates it with the most salient thing in our environment – us (Self). What, after all, is more important to us than ourselves? Which brings us back to the universe-victim theory. When our lane is moving along, we are focusing on where we are going and ignoring the traffic we overtake. When our lane is stuck, we think about ourselves and our hard luck while looking at the other lane. No wonder the association between self and being overtaken sticks in memory more.

This distorting influence of memory on our judgement lies behind a good chunk of our feelings of victimization. In some situations there is a real bias. We really do spend more time being overtaken in traffic than we do overtaking. And the smoke really does tend to follow us around the campfire, because wherever we sit creates a warm up-draught that the smoke fills. But on top of all of these is a mind that exaggerates our own importance, giving each of us the false impression that we matter more to how events work out than we really do.

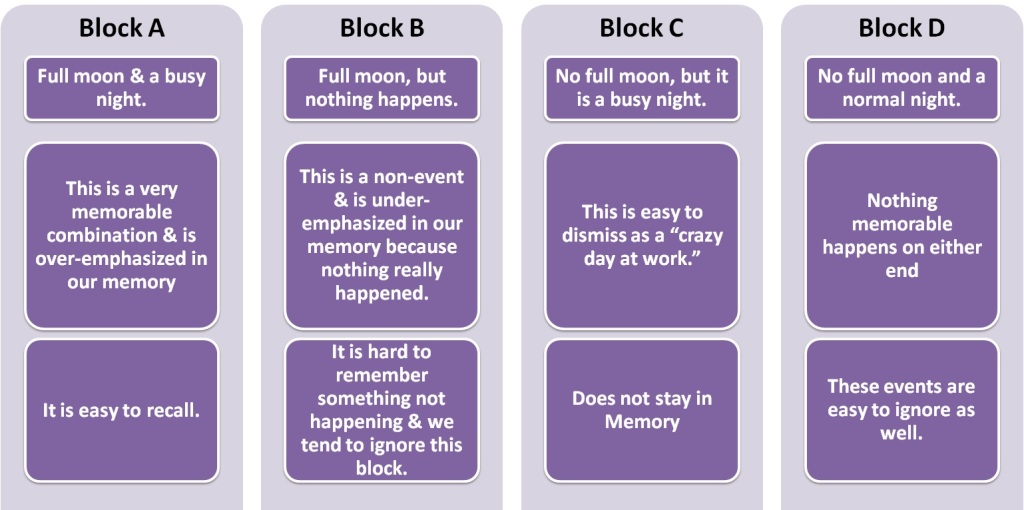

How to Spot an Illusory Correlation

There is a simple strategy we can use to spot our hidden assumptions and prevent ourselves from making an illusory correlation. It’s called a contingency table and it forces you to recognize the non-events that are easy to ignore in daily life.

Let’s break down the possibilities for having a full moon and a crazy night of hospital admissions.

This contingency table helps reveal what is happening inside the minds of nurses during a full moon. The nurses quickly remember the one time when there was a full moon and the hospital was overflowing, but simply forget the many times there was a full moon and the patient load was normal. Because they can easily retrieve a memory of a full moon and a crazy night, they incorrectly assume that the two events are related. Ideally, we would plug a number into each cell so that we can compare the actual frequency of each event, which will often be very different from the frequency we easily remember for each event.
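To make the idea of plugging a number into each cell concrete, here is a minimal Python sketch. The counts are hypothetical and chosen only for illustration; they are not taken from any study mentioned in this article. The class name, field names, and the use of the phi coefficient as a summary of association are likewise our own assumptions, not a method described in the source.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ContingencyTable:
    """2x2 table of night counts: moon phase (rows) by patient load (columns)."""

    full_moon_crazy: int   # full moon, crazy night (the memorable cell)
    full_moon_normal: int  # full moon, normal night (the forgotten non-event)
    other_crazy: int       # no full moon, crazy night
    other_normal: int      # no full moon, normal night

    def crazy_rate_full_moon(self) -> float:
        """Share of full-moon nights that were crazy (assumes at least one such night)."""
        return self.full_moon_crazy / (self.full_moon_crazy + self.full_moon_normal)

    def crazy_rate_other(self) -> float:
        """Share of all other nights that were crazy (assumes at least one such night)."""
        return self.other_crazy / (self.other_crazy + self.other_normal)

    def phi(self) -> float:
        """Phi coefficient: close to 0 means the two events are essentially unrelated."""
        a, b = self.full_moon_crazy, self.full_moon_normal
        c, d = self.other_crazy, self.other_normal
        denom = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
        return (a * d - b * c) / denom if denom else 0.0


# HYPOTHETICAL counts for one year of nights (12 full moons, 353 other nights).
table = ContingencyTable(
    full_moon_crazy=3,
    full_moon_normal=9,
    other_crazy=88,
    other_normal=265,
)

print(f"Crazy-night rate on full moons:   {table.crazy_rate_full_moon():.3f}")
print(f"Crazy-night rate on other nights: {table.crazy_rate_other():.3f}")
print(f"Phi coefficient:                  {table.phi():.4f}")
```

With these made-up numbers, the three crazy full-moon nights are the ones we would remember, yet the rate of crazy nights is almost identical with or without a full moon. Filling in all four cells, including the forgettable ones, is what exposes that.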

How to Fix Your Misguided Thinking

We make illusory correlations in many areas of life. We hear about Dhirubhai Ambani or Bill Gates dropping out of college to start a billion-dollar business and we over-value that story in our heads. Meanwhile, we never hear about all of the college dropouts who fail to start a successful company. We only hear about the hits and never hear about the misses, even though the misses far outnumber the hits.

We see someone of a particular ethnic or racial background getting arrested and so we assume that people with that background are more likely to be involved in crime. We never hear about the 99 percent of people who don’t get arrested because it is a non-event.

We hear about a shark attack on the news and refuse to go into the ocean during our next beach vacation. The odds of a shark attack have not increased since we went in the ocean last time, but we never hear about the millions of people swimming safely each day. The news is never going to run a story titled, “Millions of Tourists Float in the Ocean Each Day.” We over-emphasize the story we hear on the news and make an illusory correlation.

Most of us are unaware of how our selective memory of events influences the beliefs we carry around with us on a daily basis. We are incredibly poor at remembering things that do not happen. If we don’t see it, we assume it has no impact or rarely happens. If we understand how an illusory correlation error occurs and use strategies like the Contingency Table Test mentioned above, we can reveal the hidden assumptions we didn’t even know we had and correct the misguided thinking that plagues our everyday lives.

Even Shakespeare blamed our occasional craziness on the moon. In his play Othello he wrote, “It is the very error of the moon; she comes more near the earth than she was wont, and makes men mad.”

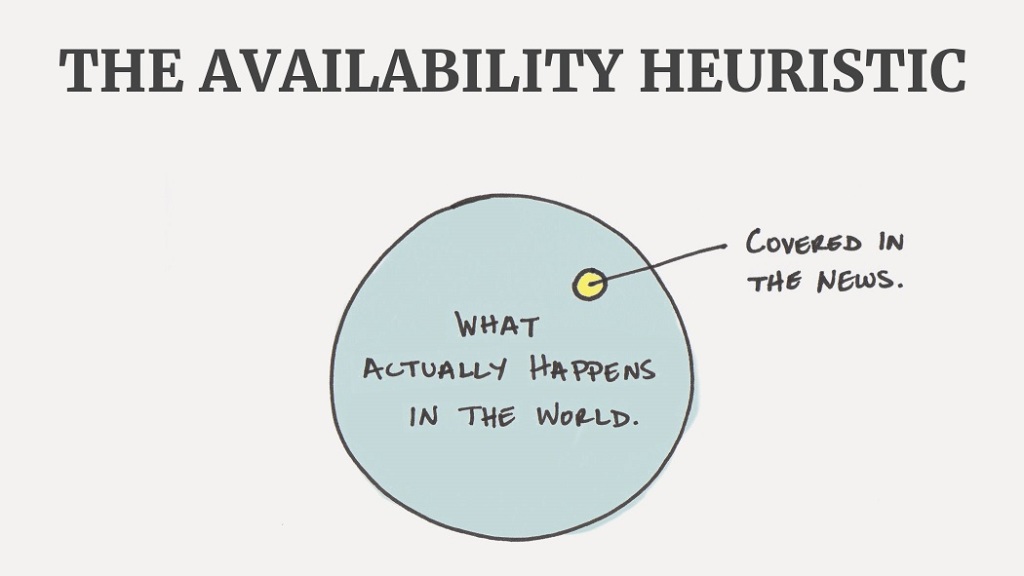

For lovers of psychology, this phenomenon is often referred to as the Availability Heuristic.

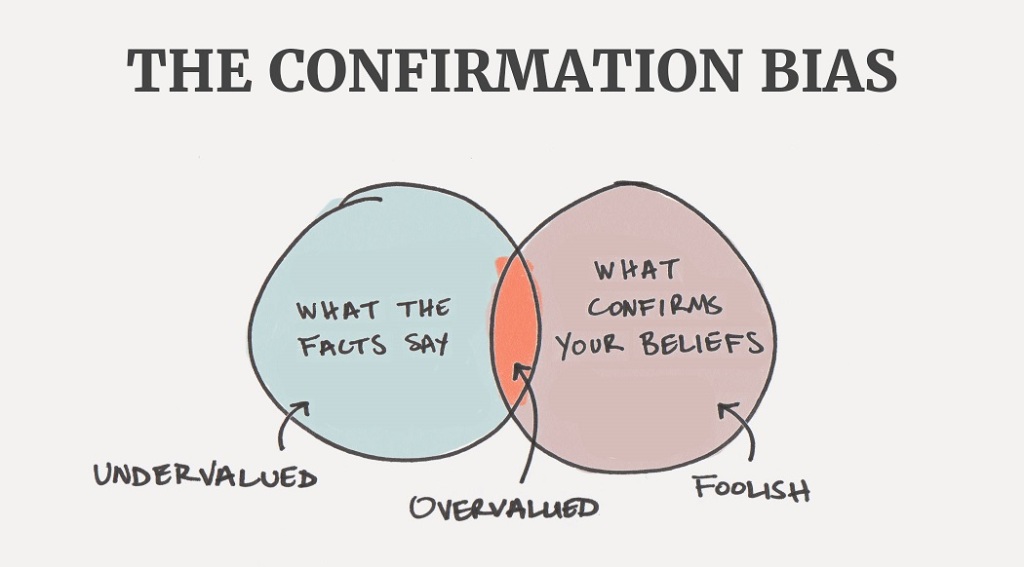

The more easily we can retrieve a certain memory or thought – that is, the more available it is in our brains – the more likely we are to overestimate its frequency and importance. The Illusory Correlation is sort of a combination of the Availability Heuristic and Confirmation Bias.

You can easily recall the one instance when something happened (Availability Heuristic), which makes you think it happens often. Then, when it happens again – like the next full moon, for example – your Confirmation Bias kicks in and confirms your previous belief.

Content Curated By: Dr Shoury Kuttappa.